delft

vision & depiction

february 2024

What a great conference ‐ all things on perception, images, form, art, and visualization. I had the pleasure of presenting my work on visualizing three-dimensional gaze data in architecture, with excellent feedback and interest from so many wonderful speakers. I also had the good fortune of learning about a wonderful Dutch artist, Jan Schoonhoven … I'll definitely be incorporating more of his work into mine. My sincerest gratitude to the organizers, Maarten Wijntjes and Catelijne van Middelkoop, for the invitation to present.

point clouds

in Tuskegee

september 2023

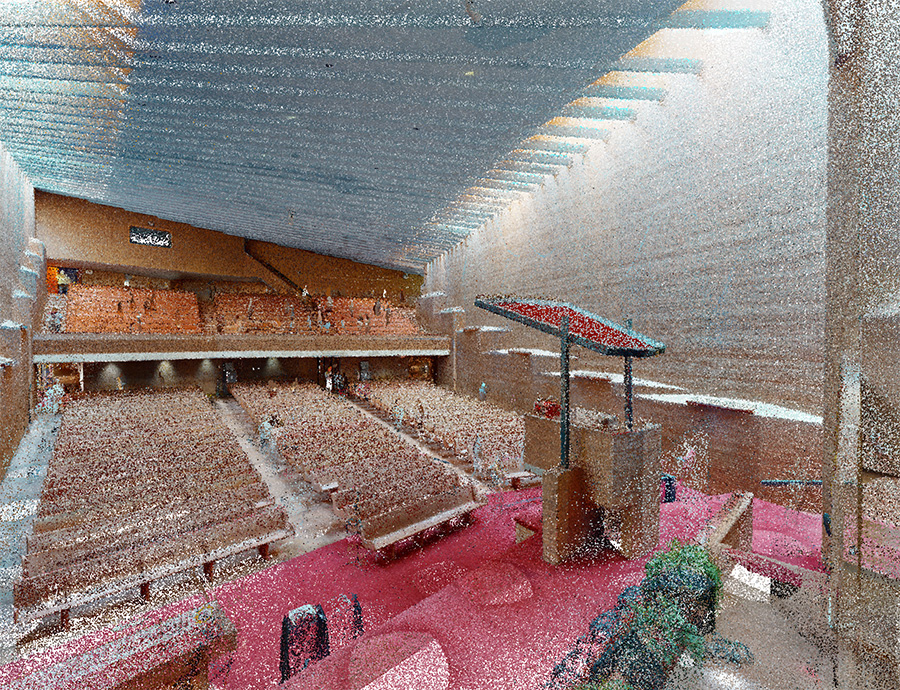

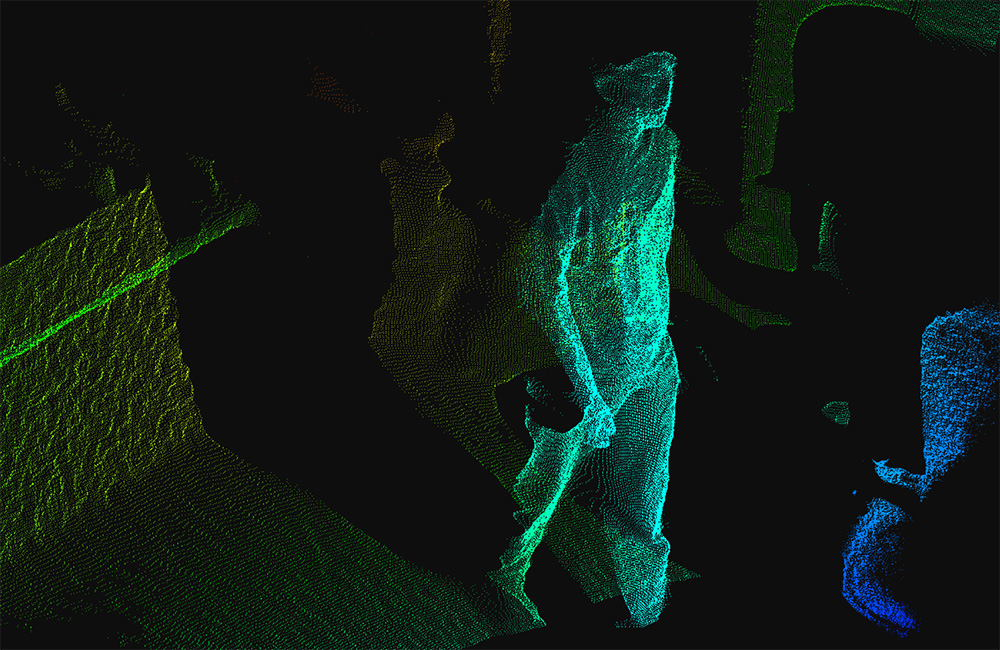

Continuing my work on integrating mobile eye tracking with LiDAR scanning, I was granted permission to scan Tuskegee Chapel (Tuskegee University, Alabama), as well as capture a handful of viewers' engagement around its various spaces. The chapel is deceptively labyrinthine ‐ besides the narthex and nave, there were endless passages and hidden rooms that curl around the four corner staircases. It was a wonderful project with even more wonderful staff. Particular thanks to Ms. Jennifer L. Hileman of Tuskegee University for her invaluable insight!

point clouds

& eye tracking

may 2023

While much of my eye‐tracking research on images looks to reveal the depth enacted by our perception, there is a special degree of perceptual simultaneity afforded by spatial eye tracking. I've just finished scanning Lehigh's Linderman Library using a hybrid LiDAR and photographic scanner, and will soon be collecting visitor engagement data to then produce novel visualizations. There is some interesting potential here, especially with the 'ghosting' of human figures inherent in this process. That's me in the corner...

integrated tracking

april 2022

It's been a long time coming, but I've finally been able to conduct a slew of tests that integrate a number of technologies in order to holistically map out the physical comportment of viewing images at a relatively larger scale (at least larger than a standard desktop monitor). Technologies include mobile eye‐tracking spectacles, a head‐mounted VSLAM unit, and three synchronized RGB+D cameras. The biggest hurdle turned out to be a very 'analog' constraint ‐ finding a room to store and conduct tests over the span of a few weeks.

related entries:

monitor space vs gallery space

voxel modeling

december 2021

Following on from my research on mapping gaze points around free-standing objects, I am developing a series of voxel-based physical models that illustrate the perceptual biases of visual attention. Right now, the main hurdle involves developing strategies for managing thousands of individual voxel sets. I’m also still figuring out optimal resin mixes that best illustrate distributed point densities.
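As a rough illustration of how gaze points might be binned into voxels and turned into relative densities, here is a minimal Python sketch. It is not the pipeline used for the physical models: the function names, the sample points, and the 0.1 voxel size are all assumptions made up for the example.

```python
from collections import Counter
from math import floor


def voxelize(points, voxel_size):
    """Count how many 3D gaze points fall into each cubic voxel.

    Each voxel is identified by its integer (i, j, k) grid index.
    """
    counts = Counter()
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        counts[key] += 1
    return counts


def normalized_density(counts):
    """Scale voxel counts to the range 0..1 (densest voxel = 1.0)."""
    peak = max(counts.values())
    return {key: n / peak for key, n in counts.items()}


# Hypothetical gaze points (arbitrary units), chosen only for illustration.
gaze_points = [(0.12, 0.44, 1.05), (0.14, 0.41, 1.07), (0.95, 0.15, 0.35)]

counts = voxelize(gaze_points, voxel_size=0.1)
density = normalized_density(counts)
```

Keeping the voxels in a dictionary keyed by grid index means that only occupied voxels are stored, which is one simple way to keep thousands of sparse voxel sets manageable.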

SPARKS talk at

SIGGRAPH DAC

october 2021

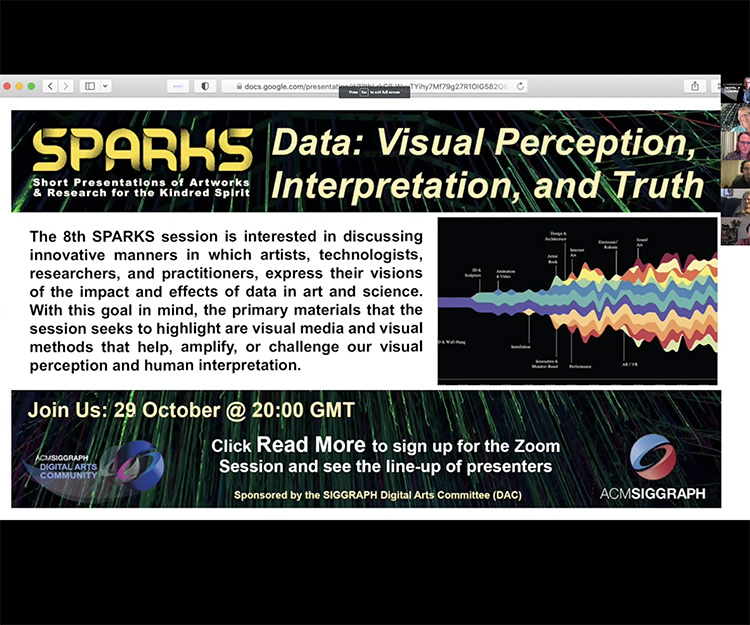

I had the pleasure of participating in a SPARKS Lightning Talk in October, in which I presented my current work on Spatial Eye-Tracking within the context of the session brief, "Data: Visual Perception, Interpretation, and Truth."

spatial eye‐tracking

august 2021

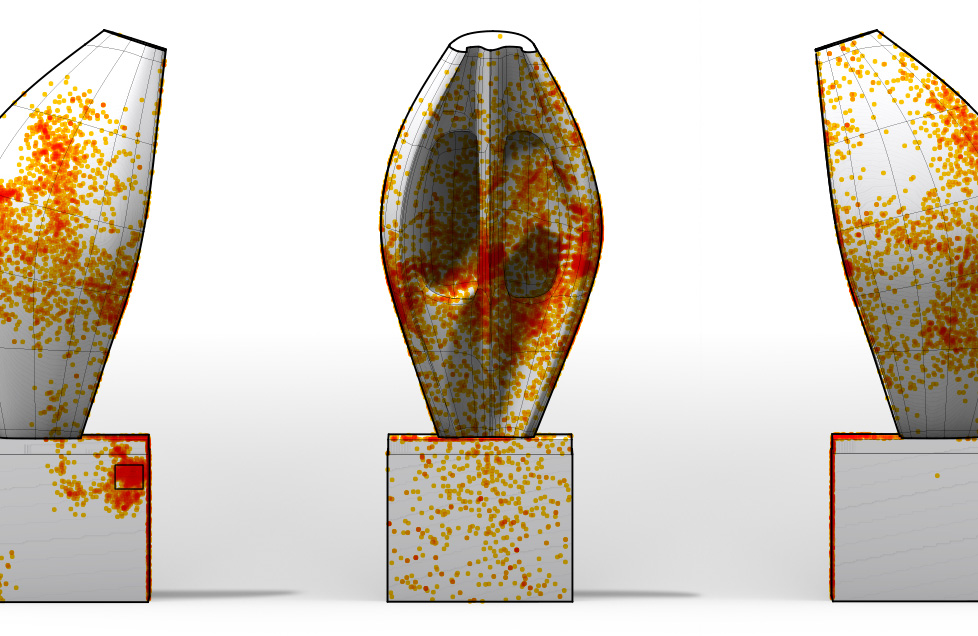

In a project that outlines basic techniques for spatial eye‐tracking, I am developing various forms of visualizing the biased regard of the eye in three dimensions. This research utilizes methods including the conversion of conventional two‐dimensional eye‐tracking standards into three dimensions, as well as the exploitation of mesh‐based modeling parameters.
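One common way to take a two-dimensional gaze point into three dimensions is to back-project it through a pinhole camera model and then rotate the resulting ray by the head pose. The sketch below shows that idea only; it is not necessarily the conversion used in this project, and the intrinsics (`FX`, `FY`, `CX`, `CY`), the head rotation, and the function names are hypothetical.

```python
from math import sqrt


def gaze_pixel_to_ray(u, v, fx, fy, cx, cy):
    """Back-project a 2D gaze point (scene-camera pixels) to a unit ray
    in the camera frame, assuming an ideal pinhole model (no distortion)."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def rotate(rotation, vector):
    """Apply a 3x3 rotation matrix (row-major nested lists) to a 3-vector."""
    return tuple(
        sum(rotation[i][j] * vector[j] for j in range(3)) for i in range(3)
    )


# Hypothetical intrinsics for a 1920x1080 scene camera.
FX, FY, CX, CY = 1000.0, 1000.0, 960.0, 540.0

# Hypothetical head pose: camera turned 90 degrees about the vertical (y) axis.
HEAD_ROTATION = [
    [0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
    [-1.0, 0.0, 0.0],
]

# A gaze point at the image centre looks straight down the camera's z axis...
ray_camera = gaze_pixel_to_ray(960.0, 540.0, FX, FY, CX, CY)
# ...which, after applying the head pose, points along the world x axis.
ray_world = rotate(HEAD_ROTATION, ray_camera)
```

The ray only gives a direction; a depth value or an intersection with scene geometry is still needed to place the gaze point in space.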

related entries:

spatial eye-tracking

forms of spatial eye-tracking

large-scale MSLA

june 2021

For a study on spatial eye‐tracking, I am reconstructing sculptures from high‐resolution scans, and physically producing them using a 3D printer (Peopoly Phenom L). Once these are printed, they will then be assembled and finished by hand, and finally used as 'stimuli' for eye‐tracking tests. I'm quickly finding that large-scale MSLA printing comes with a lot more issues to consider than when using your standard desktop MSLA printer (in my case, a couple of Elegoo printers).

related entries:

spatial eye-tracking

eye-tracking with

photogrammetry

april 2021

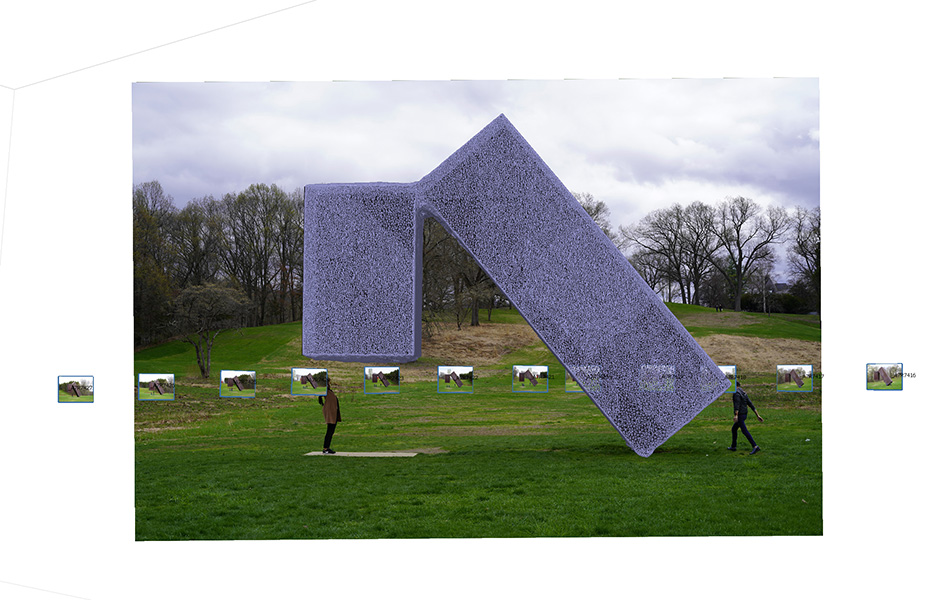

I've been developing methods for integrating eye‐tracking research with three‐dimensional objects. To further enable this process, I'm refining photogrammetry methods specifically for 'pulling' gaze points onto object geometry. At the moment, I'm capturing large-scale sculptures ‐ what better site than Storm King Art Center?
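One plausible reading of 'pulling' a gaze point onto geometry is casting the gaze ray at a triangle mesh and keeping the nearest hit. The sketch below uses the standard Möller-Trumbore ray-triangle test for that. It is an illustration under that assumption, not the method actually used here, and the function names and the toy two-triangle "mesh" are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle_distance(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: distance along the ray to the triangle, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:          # ray is parallel to the triangle plane
        return None
    inv = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv     # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(t_vec, e1)
    v = dot(direction, q) * inv  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin


def pull_gaze_onto_mesh(origin, direction, triangles):
    """Return the nearest point where the gaze ray meets the mesh, or None."""
    hits = [ray_triangle_distance(origin, direction, *tri) for tri in triangles]
    hits = [t for t in hits if t is not None]
    if not hits:
        return None
    t = min(hits)
    return tuple(o + t * d for o, d in zip(origin, direction))


# Toy mesh: two parallel triangles, one in front of the other.
mesh = [
    ((0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 1.0, 5.0)),
    ((0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0)),
]

hit = pull_gaze_onto_mesh((0.25, 0.25, 0.0), (0.0, 0.0, 1.0), mesh)
miss = pull_gaze_onto_mesh((5.0, 5.0, 0.0), (0.0, 0.0, 1.0), mesh)
```

A brute-force loop over every triangle is fine for a toy mesh; a photogrammetry model with many triangles would normally call for a spatial index such as a bounding volume hierarchy.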

digital approaches

to art history

march 2021

At a conference hosted by the University of Oxford and Durham University, I presented a paper on my developing work on the integration of various techniques for spatial eye‐tracking. The paper outlined both the technical premises and the projected potentials of such techniques. The latter included extending the many studies on traditional two‐dimensional eye‐tracking into the domain of three‐dimensional space. More than a mere addition of another dimension, the recording of an individual's bodily movement opens up a deeper and more complex chain of considerations regarding the network between the physical space of a work of art, the gaze of a viewer, and the bodily movements that enable their gaze.

related entries:

spatial eye-tracking

keeping up

february 2021

As mentioned in the previous entry, I'm recording the movements of a hurdler in action by using a depth camera. To maintain a constant distance between the hurdler and the recording apparatus, I've retrofitted a Segway Ninebot Max to carry all scanning devices, including a tablet, an Azure Kinect, and batteries for running all devices. During recording, care has to be taken not to tilt the scooter too much, so that the hurdler remains within the camera frame. And, as it turns out, the scooter's top speed of 31 km/h is barely fast enough for this particular application.

body tracking

w/ Azure Kinect DK

february 2021

I'm putting together a research project that involves mapping the movements of a hurdler along a straightaway track. In order to capture the constantly changing poses, in lieu of any elaborate motion-capture setup, I'm testing the viability of simply using a single Azure Kinect DK (on wheels).

So far, this has involved using Microsoft's Azure Kinect SDK along with their Body Tracking SDK. For the sake of immediate setup and testing, movements are recorded using Microsoft's k4arecorder.exe, while asynchronous translation of captured depth frames is achieved with a bespoke application involving offline conversion of a .mkv file into a .json file. Thereafter, visualizations are drawn in Rhino using a custom-written script made in Python which simply converts .json entries into Python-legible lists.
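To give a sense of the last step (turning .json entries into plain Python lists), here is a minimal sketch. The JSON layout shown (`frames`, `bodies`, `joint_positions`, `timestamp_usec`, `body_id`) is an assumed, hypothetical structure for illustration; the bespoke converter's actual output format is not documented here, and the Rhino drawing step is left out.

```python
import json

# Hypothetical sample of converted body-tracking output (two frames,
# two joints per body). The real file layout may differ.
SAMPLE = """
{
  "frames": [
    {"timestamp_usec": 0,
     "bodies": [{"body_id": 1,
                 "joint_positions": [[0.0, 0.0, 2.0], [0.0, -0.3, 2.0]]}]},
    {"timestamp_usec": 33333, "bodies": []}
  ]
}
"""


def load_joint_tracks(text):
    """Flatten the JSON into a list of (timestamp, body_id, joints) tuples,
    where joints is a list of (x, y, z) tuples. Frames without a detected
    body contribute nothing."""
    data = json.loads(text)
    tracks = []
    for frame in data["frames"]:
        for body in frame["bodies"]:
            joints = [tuple(position) for position in body["joint_positions"]]
            tracks.append((frame["timestamp_usec"], body["body_id"], joints))
    return tracks


tracks = load_joint_tracks(SAMPLE)
```

Each entry in `tracks` is then an ordinary Python structure that a drawing script could loop over, one skeleton per frame.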

Findings from this project will be updated in a future research entry.

depth cameras

intel vs microsoft

january 2021

Spatial eye-tracking depends on my being able to reproduce sculptures and other large objects with relative accuracy using digital modeling. To aid in this process, I've been testing different hand-held depth cameras in conjunction with point-cloud modeling software. As opposed to larger and more expensive devices built specifically for 3D scanning, the devices being tested are designed for more mobile and ad-hoc testing environments (e.g. autonomous agents that require spatial mapping). At the moment I'm comparing the results from Intel's RealSense D435 (rubbish) and D455 (pretty good) along with Microsoft's Azure Kinect DK (the successor to the Kinect v2).

My findings are incorporated within a number of projects included within the research section of this site, and I'm looking to provide a side-by-side comparison of results later in the year.

casting nefertiti

january 2021

After months of 3D-printing and assembling separate panels of the Nefertiti Bust, I've finally got around to producing a plaster cast by creating a silicone mold accompanied by a fiber-reinforced shell structure. This was my first time using Hydrocal White, and so far the results are successful. The latest cast still requires manual refinement of seams with some tedious sanding, but it looks like it will be usable as a testing stimulus for spatial eye-tracking. This replica stands at almost 20 inches tall and weighs a ton (or closer to 55 lbs).

It was produced for a forthcoming research project that is expected to be drafted towards Q3 of 2021.

related entries:

forms of spatial eye tracking

mapping coplay

kilns

december 2020

As part of gathering recordings for large-scale stimuli for mapping spatial eye-tracking, I visited the Coplay Cement Kilns in Saylor Park, located in Coplay, Pennsylvania. The nine vertical kilns used to be housed within a cement factory.

The walk took six minutes on average. The SLAM device picked up the changing topography with surprising accuracy, which suggested a new avenue of research in which large spaces could be quickly mapped out using SLAM, and thereafter refined using landmark references.
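One simple form that refinement against landmark references could take is a least-squares rigid alignment: find the rotation and translation that best map landmark positions as seen by SLAM onto their known surveyed positions, then apply the same transform to the whole walking path. The 2D sketch below shows only that idea, under the assumption that a rigid fit is enough; the function names and coordinates are hypothetical.

```python
from math import atan2, cos, sin


def fit_rigid_2d(source, target):
    """Least-squares rotation angle and translation mapping source points
    onto target points (matched by index), in the plan view."""
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(p[0] for p in target) / n
    ty = sum(p[1] for p in target) / n
    num = den = 0.0
    for (ax, ay), (bx, by) in zip(source, target):
        ax, ay, bx, by = ax - sx, ay - sy, bx - tx, by - ty
        den += ax * bx + ay * by   # summed dot products
        num += ax * by - ay * bx   # summed 2D cross products
    theta = atan2(num, den)
    c, s = cos(theta), sin(theta)
    offset = (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
    return theta, offset


def apply_rigid_2d(theta, offset, points):
    """Rotate points by theta, then translate by offset."""
    c, s = cos(theta), sin(theta)
    return [(c * x - s * y + offset[0], s * x + c * y + offset[1]) for x, y in points]


# Hypothetical landmarks: positions reported by SLAM vs. surveyed positions.
slam_landmarks = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
surveyed_landmarks = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]

theta, offset = fit_rigid_2d(slam_landmarks, surveyed_landmarks)
corrected_path = apply_rigid_2d(theta, offset, [(1.0, 1.0)])
```

A rigid fit corrects a global offset and heading error but not drift that accumulates along the walk, which would need a more elaborate correction.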

The data generated from this site visit will contribute to development in spatial eye-tracking.

related entries:

spatial eye-tracking

common form site

december 2020

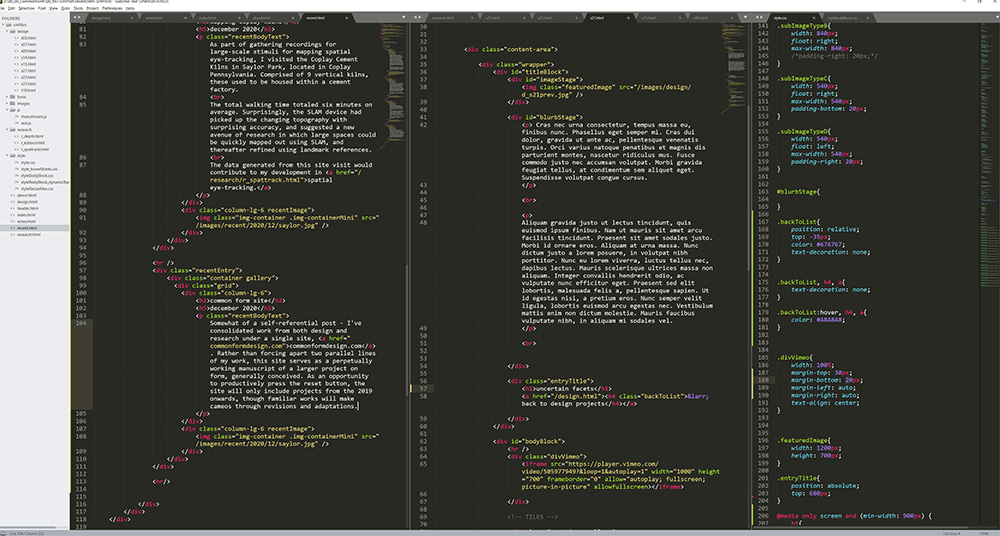

Somewhat of a self-referential post - I've consolidated work from both design and research under a single site, commonformdesign.com. Rather than forcing apart two parallel lines of my work, this site serves as a perpetually working manuscript of a larger project on form, generally conceived. As an opportunity to productively press the reset button, the site will include non-commercial projects primarily from 2018 onwards, though familiar works will make cameos through revisions and adaptations.
